(674c) Bayesian Learning from Hybrid Models and Their Applications in Thermodynamics and Reaction Engineering

2020 Virtual AIChE Annual Meeting

Computing and Systems Technology Division

Data-Driven and Hybrid Modeling for Decision Making

Thursday, November 19, 2020 - 8:30am to 8:45am

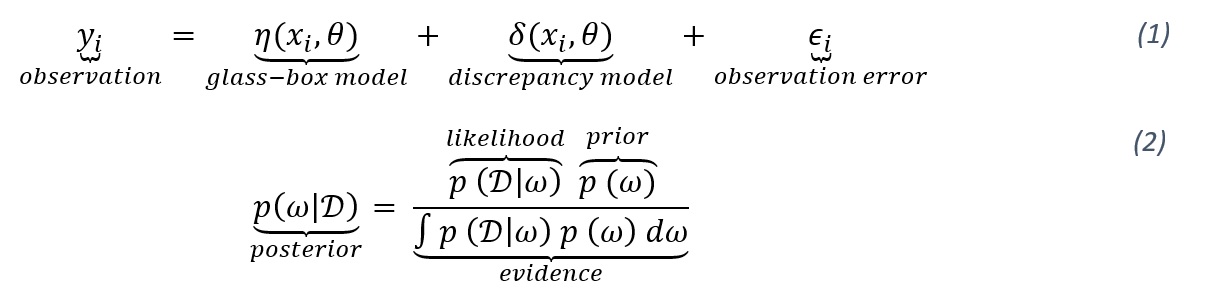

Epistemic uncertainty, also called model-form uncertainty, arises from model inadequacies caused by simplifying assumptions or neglected control variables. Aleatoric uncertainty is a consequence of random phenomena such as experimental variability and noisy observations. The seminal work by Kennedy and O'Hagan[4] introduced a Bayesian framework for simultaneously quantifying the aleatoric and epistemic uncertainty inherent in all mathematical models; the framework is shown in Eq. (1) (see attached image).

The experimental observation for experiment i is modeled using three components. The glass-box model η(.,.) contains physically meaningful global parameters θ and inputs xᵢ. The stochastic discrepancy function δ(.) counteracts systematic bias in the glass-box model. Observation error εᵢ is modeled as uncorrelated white noise, i.e., ε ~ N(0, σ²I). The discrepancy function is a Gaussian process (GP), δ ~ GP(μ(.), k(.,.)), whose outputs δᵢ follow a conditional normal distribution with mean and covariance fully specified by the mean function μ(.) and the kernel function k(.,.), respectively. The parameters of the kernel function, along with those of the white-noise model (i.e., σ), are denoted as hyperparameters φ.[5]
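Since Eq. (1) is only available in the attached image, the three components described above can be written out as a sketch. This is the additive form implied by the text, not a transcription of the image, so the exact notation there may differ:

```latex
\begin{align}
  y_i &= \eta(x_i, \theta) + \delta(x_i) + \varepsilon_i, \\
  \delta(\cdot) &\sim \mathcal{GP}\big(\mu(\cdot),\, k(\cdot,\cdot)\big), \\
  \varepsilon_i &\sim \mathcal{N}(0, \sigma^2) \quad \text{independently for each experiment } i .
\end{align}
```

Here y_i (a symbol assumed for this sketch) denotes the observed output of experiment i. The first term carries the physics, the second absorbs epistemic (model-form) error, and the third represents aleatoric noise.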

The model parameters θ and hyperparameters φ are determined using Bayesian calibration, shown in Eq. (2) (see attached image). Bayesian model calibration interprets the parameters ω, here θ and φ taken together, as random variables. The standard workflow is to encode one's current belief in a prior probability distribution p(ω), observe data D, and apply Bayes' rule, Eq. (2), to obtain the posterior probability distribution p(ω|D).[6] In our workflow, we use the posterior distribution from the calibration of the hybrid model as the uncertainty set in a stochastic program to minimize the uncertainty in the model predictions.
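As a minimal illustration of the prior-to-posterior update, the sketch below applies Bayes' rule, p(ω|D) ∝ p(D|ω) p(ω), on a grid for a deliberately simple stand-in problem. The linear model y = θx, the Gaussian prior, the known noise level, and all names (`grid_posterior`, `theta_grid`, and so on) are assumptions made for this example; it is not the hybrid-model calibration used in the talk, which also infers the GP hyperparameters.

```python
import numpy as np

def grid_posterior(x, y, sigma, theta_grid, prior_mean=0.0, prior_std=10.0):
    """Posterior density p(theta | D) on a uniform grid.

    Toy model (an assumption for this sketch): y_i = theta * x_i + eps_i,
    with eps_i ~ N(0, sigma^2) and a Gaussian prior on theta.
    """
    # Log prior, up to an additive constant.
    log_prior = -0.5 * ((theta_grid - prior_mean) / prior_std) ** 2
    # Log likelihood: sum of Gaussian log densities over all experiments.
    resid = y[None, :] - theta_grid[:, None] * x[None, :]
    log_like = -0.5 * np.sum((resid / sigma) ** 2, axis=1)
    # Bayes' rule in log space; subtract the max for numerical stability.
    log_post = log_prior + log_like
    post = np.exp(log_post - log_post.max())
    # Normalising on the grid plays the role of dividing by the evidence p(D).
    dtheta = theta_grid[1] - theta_grid[0]
    return post / (post.sum() * dtheta)

# Six synthetic "experiments" with a fixed seed for reproducibility.
rng = np.random.default_rng(0)
x = np.linspace(1.0, 5.0, 6)
theta_true, sigma = 2.0, 0.5
y = theta_true * x + rng.normal(0.0, sigma, size=x.size)

theta_grid = np.linspace(0.0, 4.0, 4001)
dtheta = theta_grid[1] - theta_grid[0]
post = grid_posterior(x, y, sigma, theta_grid)
post_mean = np.sum(theta_grid * post) * dtheta
print(f"posterior mean of theta: {post_mean:.3f}")
```

Grid evaluation is only practical for one or two parameters; for higher-dimensional ω, sampling-based methods such as Markov chain Monte Carlo are the usual choice.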

Using ballistic firing as an illustration,[1] we demonstrate how Bayesian hybrid models overcome systematic bias from missing physics by running computer experiments in which a single-stage stochastic program is used to hit a target. We highlight how the hybrid model outperforms a pure machine-learning (data-driven, GP) model when little data is available (5 to 6 experiments). We argue that Bayesian hybrid models are an emerging paradigm for data-informed decision-making under uncertainty by discussing two case studies from chemical engineering. In the first case study, we use our workflow to design a continuous stirred-tank reactor (CSTR) by using Bayesian hybrid models to calibrate a reaction kinetics model built on oversimplified assumptions. In the second case study, we use Bayesian hybrid models to fit thermodynamic data to equations of state and apply our workflow to propagate epistemic uncertainty into the design of a flash process. We contrast the merits of our technique with those of a pure machine-learning (data-driven), GP-based workflow and conclude by advocating for a fully Bayesian decision-making methodology, which provides the probability distributions necessary for stochastic programming and enables iterative (sequential) design of experiments.
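The ballistics illustration can be mimicked with a small, self-contained sketch. Everything specific here is an assumption made for the example rather than the setup used in the talk: the glass-box model is the drag-free range at a 45-degree launch angle, the "true" system is that range shrunk by a made-up drag factor, the GP hyperparameters are fixed by hand instead of being calibrated, and the decision problem is simplified to minimizing the expected squared miss over a grid of launch velocities.

```python
import numpy as np

G = 9.81                      # gravitational acceleration, m/s^2
SCALE, LENGTH = 100.0, 20.0   # hand-picked GP hyperparameters (not calibrated)

def glass_box(v):
    """Drag-free range at a 45-degree launch angle (the simplified physics)."""
    return v**2 / G

def true_range(v, c=0.01):
    """Hypothetical 'real' system: vacuum range reduced by a made-up drag factor."""
    return glass_box(v) / (1.0 + c * v)

def rbf(a, b):
    """Squared-exponential kernel k(a, b)."""
    d = a[:, None] - b[None, :]
    return SCALE**2 * np.exp(-0.5 * (d / LENGTH) ** 2)

def gp_posterior(x_train, r_train, x_new, noise=1.0):
    """Posterior mean and variance of a zero-mean GP fitted to residuals."""
    K = rbf(x_train, x_train) + noise**2 * np.eye(x_train.size)
    Ks = rbf(x_new, x_train)
    mean = Ks @ np.linalg.solve(K, r_train)
    var = SCALE**2 - np.sum(Ks * np.linalg.solve(K, Ks.T).T, axis=1)
    return mean, np.maximum(var, 0.0)

# Six noisy "experiments", matching the low-data regime described above.
rng = np.random.default_rng(1)
v_train = np.linspace(10.0, 60.0, 6)
y_train = true_range(v_train) + rng.normal(0.0, 1.0, size=v_train.size)

# Discrepancy data: what the glass-box model fails to explain.
resid = y_train - glass_box(v_train)

# Hybrid prediction = glass-box model + GP discrepancy.
v_grid = np.linspace(10.0, 60.0, 501)
delta_mean, delta_var = gp_posterior(v_train, resid, v_grid)
hybrid_mean = glass_box(v_grid) + delta_mean

# Single-stage decision: pick the velocity minimising the expected squared miss,
# E[(R - target)^2] = (mean - target)^2 + variance for a Gaussian prediction R.
target = 100.0
expected_sq_miss = (hybrid_mean - target) ** 2 + delta_var
v_hybrid = v_grid[np.argmin(expected_sq_miss)]
v_glass = v_grid[np.argmin((glass_box(v_grid) - target) ** 2)]

miss_hybrid = abs(true_range(v_hybrid) - target)
miss_glass = abs(true_range(v_glass) - target)
print(f"glass-box only: v = {v_glass:.1f} m/s, miss = {miss_glass:.1f} m")
print(f"hybrid model:   v = {v_hybrid:.1f} m/s, miss = {miss_hybrid:.1f} m")
```

Because the glass-box model ignores drag, optimizing against it alone undershoots the target, while the GP discrepancy term learns the missing effect from the six residuals. A fully Bayesian treatment would replace the fixed hyperparameters with draws from the posterior p(ω|D).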

References:

[1] Eason, J. P. & Biegler, L. T. Advanced trust region optimization strategies for glass box/black box models. AIChE Journal 64, 3934-3943 (2018). 10.1002/aic.16364.

[2] Jones, M., Forero-Hernandez, H., Zubov, A., Sarup, B. & Sin, G. Superstructure optimization of oleochemical processes with surrogate models. In Computer Aided Chemical Engineering, 44, 277-282. 10.1016/B978-0-444-64241-7.50041-0.

[3] Eugene, E. A., Gao, X. & Dowling, A. W. Learning and optimization with Bayesian hybrid models. In Proceedings of the 2020 American Control Conference, Accepted (2020). arXiv:1912.06269.

[4] Kennedy, M. C. & O'Hagan, A. Bayesian calibration of computer models. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 63, 425-464 (2001). 10.1111/1467-9868.00294.

[5] Bishop, C. M. Pattern Recognition and Machine Learning (Springer, 2006).

[6] Higdon, D., Kennedy, M., Cavendish, J. C., Cafeo, J. A. & Ryne, R. D. Combining field data and computer simulations for calibration and prediction. SIAM Journal on Scientific Computing 26, 448-466 (2004). 10.1137/S1064827503426693.