
The human brain tends to simplify high-consequence, low-frequency events, which can be particularly dangerous in process hazard analysis (PHA) development.

Duck, Duck, Goose is a traditional, much-loved children’s game that plays on the balance between expectation and sudden reality. Process safety management (PSM) deals with similar themes. If you replace the goose with a swan — particularly a black swan — you can highlight the frequency of the event. Frequency is a critical topic in process safety, where we try to determine the likelihood of a particular event occurring.

The term “Black Swan Event” was first coined in financial markets with the release of Wall Street trader Nassim Nicholas Taleb’s aptly titled book, “The Black Swan: The Impact of the Highly Improbable” (1). He describes a black swan event as having three key characteristics:

- it is unpredictable

- it has a massive impact

- after the fact, an explanation makes it appear less random and more predictable than it was.

Examples of high-consequence (massive impact) globally known events from multiple landscapes are shown in Table 1 (2–4). Many argued that the 2020 pandemic was a black swan event due to its unpredictability and death toll. Yet, when you assess the predictability of such an event, that argument is flawed, highlighting the complexity of incidents.

This article explores the complex nature of predictability and what the process safety industry can learn from psychological sciences and the financial market.

Background

Frequency and severity are common stumbling blocks in prediction and estimation, where the human brain can take shortcuts and make errors along the way. The value of prediction and estimation cannot be ignored; however, it can be improved through psychological understanding. This section details relevant process safety and psychological science definitions.

Process safety. To understand the risk a process, unit, or facility presents, two key elements must be understood: the likelihood of an event (i.e., frequency) and the magnitude (i.e., severity) of the impact of the event. The Center for Chemical Process Safety (CCPS) Process Safety Glossary (5) defines such terms as the following:

- Risk: a measure of human injury, environmental damage, or economic loss in terms of both the incident likelihood (frequency) and the magnitude (severity) of the injury or loss

- Frequency: the number of occurrences of an event per unit time (e.g., 1 event in 1,000 years = 1 × 10⁻³ events/yr)

- Severity: the maximum credible consequences or effects, assuming no safeguards are in place.
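The frequency definition above can be made concrete with a short calculation. The following is a minimal sketch rather than a CCPS method: the function names and the dollar figure used for severity are hypothetical, and multiplying frequency by severity is only one simple way to combine the two elements of risk.

```python
def frequency_per_year(events: float, years: float) -> float:
    """Number of occurrences of an event per unit time, in events/yr."""
    if years <= 0:
        raise ValueError("years must be positive")
    return events / years


def expected_annual_loss(frequency: float, severity: float) -> float:
    """One simple risk measure (an assumption here): frequency x severity."""
    return frequency * severity


# 1 event in 1,000 years, as in the glossary example
freq = frequency_per_year(events=1, years=1_000)

# Hypothetical severity: a $50 million loss if the event occurs
loss = expected_annual_loss(freq, severity=50_000_000)

print(f"frequency = {freq:.0e} events/yr")
print(f"expected annual loss = ${loss:,.0f}/yr")
```

A single number like this hides the distinction the rest of the article draws: a rare, severe event and a frequent, minor one can produce the same product.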

When using risk management tools, engineers are required to estimate the severity of a consequence and the frequency of an event. This article uses process hazard analyses (PHAs) as a point of reference. A PHA is a proactive review of process equipment and supporting procedures/activities that ensures the facility design and related PSM systems are properly managing the identified hazards.

Psychological sciences. The brain is a complex organ that controls thought, memory, emotion, touch, motor skills, vision, breathing, temperature, and hunger (i.e., body regulation). With so much being asked of the brain at any given moment, you can understand the brain’s need to create shortcuts and its propensity to make errors. Some of the shortcuts and errors that the brain regularly makes can be defined as the following:

- Heuristics: rules of thumb that can be applied to guide decision-making based on a more-limited subset of the available information. As they rely on less information, heuristics are assumed to facilitate faster decision-making than strategies that require more information (6).

- Biases: a display of partiality where an inclination or predisposition for or against something is expressed (7).

Cognitive bias, the main focus of this article, is a flaw in your reasoning that leads you to misinterpret information from the world around you and form inaccurate conclusions.

Several heuristics and biases, as well as their impact on estimating the frequency and severity of events, will be discussed further in the psychological framework section.

Black swans and cognitive bias in the literature

As mentioned, the starting point for this article is Nassim N. Taleb’s book that introduced black swans (1). First released in 2007, the book utilized the author’s experience as a derivatives/options trader on Wall Street to theorize the human tendency to find simplistic explanations for extreme-impact events that were deemed unpredictable. Following the financial crisis of 2008, Taleb released the second edition of the book in 2010, adding detail from the real-life example of the fragile markets that had failed to prepare for such a black swan event.

A controversial commentary provided in Taleb’s book is how difficult it can be to make predictions in environments subjected to black swans (and the general lack of awareness of the psychological barriers at play), given that certain professionals, while believing they are subject matter experts, are in fact not. Although the readers of this article may be experts in the field of process safety and risk management, they may not be as familiar with the principles of psychological science and cognitive bias. This article aims to equip readers with the basics of these principles to help close the gap that Taleb mentions.

Daniel Kahneman’s 2011 book, “Thinking, Fast and Slow” (8), can also supplement this discussion. This book examines the intricacies of the human brain and the patterns we can observe in human thinking and interpretation of data and situations. The late Kahneman was a Professor of Psychology Emeritus at Princeton Univ. and a Nobel Prize-winning economist.

Kahneman’s book (8) provides an intriguing commentary that breaks down where we can and cannot trust our intuitions, encouraging the reader to maximize the use of their “slow thinking” — taking emotion out of the equation and thinking with logic and intention. Some of the key considerations in this article are the workings of the mind that help prepare those tasked with event prediction (and, ultimately, risk assessment) to avoid future incidents.

Additional literature has also been reviewed, primarily focusing on current practices for modeling (9) and understanding (10) high-consequence, low-frequency events, as well as lessons we can learn from previous incidents in the chemical (11) and energy (12) industries.

Another example of applying financial principles and understanding to shed light on accident phenomena can be found in Ref. 13.

Psychological framework

To understand how the human brain processes information and responds, we first must understand a simple mapping of the brain — the cortical center (used for thinking) and limbic center (used for emotions). The split concept of the brain is developed further as two systems of thinking. The terms System 1 and System 2 were first coined by psychologists Keith Stanovich and Richard West in 2000 (14) and developed further by Kahneman in 2011 (8):

- System 1 operates automatically and quickly, with little or no sense of voluntary control (i.e., emotions).

- System 2 allocates attention to the effortful mental activities that demand it, including complex computations (i.e., thinking — the subjective experience of agency, choice, and concentration).

With the foundation of brain responses set, the theme of prediction and the subsequent dangerous simplification can be explored.

Much of Taleb’s discussion on the black swan phenomenon is based on his depiction of two provinces that the world and events fall into: Mediocristan and Extremistan.

Mediocristan is where some events don’t contribute much individually, only collectively. According to the theory, when your sample is large, no single instance will significantly change the aggregate or total. An example of this can be drawn from a human’s calorie consumption over a year. Even the day with your largest calorie intake, e.g., Thanksgiving with a gluttonous dinner (approximately 4,500 calories), is minimal in comparison to the entire year’s consumption (approximately 800,000 calories) (15).

Extremistan is where events or inequalities are such that a single observation can disproportionately impact the aggregate or total. An example can be drawn from human wealth across a sample of 1,000 Americans. If the sample includes the world’s wealthiest person (e.g., Elon Musk, with an estimated net worth of more than $200 billion at the time of writing), that one person accounts for more than 99.9% of the wealth across the 1,000.
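The contrast between the two provinces can be checked with simple arithmetic. The sketch below uses the calorie and net-worth figures quoted in the text; the net worth assigned to the other 999 people (`ASSUMED_TYPICAL_NET_WORTH`) is a hypothetical placeholder, not a figure from the source.

```python
def largest_share(values):
    """Fraction of the total contributed by the single largest observation."""
    return max(values) / sum(values)


# Mediocristan: largest daily calorie intake vs. the yearly total (from the text)
thanksgiving_share = 4_500 / 800_000

# Extremistan: 999 people at a hypothetical net worth plus one at $200 billion
ASSUMED_TYPICAL_NET_WORTH = 100_000  # assumption for illustration only
wealth = [ASSUMED_TYPICAL_NET_WORTH] * 999 + [200_000_000_000]
wealth_share = largest_share(wealth)

print(f"largest day of eating: {thanksgiving_share:.2%} of the year")
print(f"wealthiest person: {wealth_share:.2%} of the sample's wealth")
```

Under these assumptions, the largest observation is well under 1% of the total in the first case and more than 99.9% in the second.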

A simplified takeaway is that the Mediocristan province accounts for physical matters, whereas the Extremistan province accounts for social matters. With one of the defining characteristics of a black swan being its unpredictability (i.e., possessing a randomness beyond belief), it is not possible to have a black swan event reside in the Mediocristan province.

It is too easy to believe that most safety incidents result from a mistimed, unobserved, or unintended physical situation and thus reside in Mediocristan. However, human responsibility for and interaction with processes suggest that such incidents may instead belong to Extremistan (i.e., they are liable to black swans).

This section details several key concepts that hamper human interpretations of events and, ultimately, the simplification of rare and impactful events — notably heuristics and biases, with anecdotes to further illustrate.

Confirmation bias. The first and perhaps most prevalent danger in human interpretation is confirmation bias, where people tend to seek out and interpret information in ways that confirm what they already believe. Confirmation bias encourages people to ignore or invalidate information that conflicts with their beliefs.

Consider Figure 1, where an arbitrary variable is plotted against time (days). In Taleb’s example (1), the variable tracks a turkey’s life over the 1,000 days leading up to Thanksgiving; on day 1,001, the turkey’s life ends. If we were to review only the first 1,000 days of the turkey’s life, we would be inclined to believe day 1,001 would be no different. This is confirmation bias at work: it encourages a naïve projection of the future based on the past.

▲Figure 1. Confirmation bias often arises in data analysis, and this is especially true for scenarios where major events do not occur frequently. In this case, the major event does not occur until day 1,001. The analysis will look much different if it only looks at the 1,000-day period before the event (1).
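The turkey example can be sketched numerically. In the minimal illustration below, the well-being score, its starting value, and its daily increase are all hypothetical; the point is only that a forecast built from the first 1,000 days cannot contain the event on day 1,001.

```python
def naive_forecast(history):
    """Project the next value by extending the average daily change so far."""
    average_change = (history[-1] - history[0]) / (len(history) - 1)
    return history[-1] + average_change


# Hypothetical score that rises steadily on each of days 1 through 1,000
history = [100 + 0.5 * day for day in range(1, 1001)]

predicted_day_1001 = naive_forecast(history)
actual_day_1001 = 0.0  # the event that the history never showed

surprise = predicted_day_1001 - actual_day_1001
print(f"predicted: {predicted_day_1001}, actual: {actual_day_1001}")
```

Every additional uneventful day makes the naive forecast more confident, which is exactly when it is most wrong.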

With prediction and estimation, numbers are often the basis of logic and reason. An early experiment on confirmation bias was performed by P. C. Wason (16), where he presented subjects with a three-number sequence (i.e., 2, 4, 6) and asked them to theorize the rule generating it. The subjects generated other three-number sequences to which Wason would respond “Yes” or “No” if they corroborated the rule. Confidence in their rules soon developed, e.g., ascending numbers in twos, ascending even numbers, etc. The correct rule was, in fact, simply numbers in ascending order. Subjects continued to generate sequences that would confirm their rules rather than disprove them.
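Wason's task can be mimicked in a few lines. This is a sketch of the logic only, not a reproduction of the original experiment: the subject's guessed rule and the specific test sequences are illustrative assumptions.

```python
def true_rule(seq):
    """The rule in the task: any three numbers in ascending order."""
    a, b, c = seq
    return a < b < c


def hypothesis(seq):
    """A subject's guess: each number is 2 more than the previous one."""
    a, b, c = seq
    return b - a == 2 and c - b == 2


def is_informative(seq):
    """A test teaches something only when the guess and the true rule disagree."""
    return hypothesis(seq) != true_rule(seq)


# Sequences chosen to confirm the guess: all receive "Yes" and reveal nothing new
confirming_tests = [(8, 10, 12), (1, 3, 5), (20, 22, 24)]

# Sequences chosen to break the guess: a "Yes" here refutes the guessed rule
disconfirming_tests = [(1, 2, 10), (5, 50, 500)]

for seq in confirming_tests + disconfirming_tests:
    print(seq, "Yes" if true_rule(seq) else "No", "informative:", is_informative(seq))
```

Only the sequences that the subject expects to fail can separate the guessed rule from the true one.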

The narrative fallacy. A heuristic shortcut that the brain likes to apply is through narration. We like stories, summaries, and, ultimately, simplification. We are vulnerable to overinterpretation and have a predilection for succinct stories over raw truths. We have a limited ability to look at facts without weaving an explanation into them, i.e., formulating a logical link.

Andrey Nikolayevich Kolmogorov was a respected mathematician who defined the degree of randomness (17). Humans tend to remove the randomness of the world by finding patterns, which allows for simplification. A full page of numbers written down would require significant effort to remember, but if we are able to find a pattern, the brain only has to remember that pattern. However, black swans lie outside of patterns and therefore would be omitted from the simplification.
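The link between pattern and compressibility can be illustrated with a general-purpose compressor. Kolmogorov complexity itself cannot be computed, so the sketch below uses the compressed size from Python's `zlib` module as a rough stand-in, which is an assumption of this example rather than a method from the cited literature.

```python
import random
import zlib

LENGTH = 10_000

# A "page of numbers" with an obvious pattern: 0-9 repeated
patterned = bytes(i % 10 for i in range(LENGTH))

# A page of the same length with no pattern (seeded for repeatability)
rng = random.Random(0)
patternless = bytes(rng.randrange(256) for _ in range(LENGTH))

patterned_size = len(zlib.compress(patterned))
patternless_size = len(zlib.compress(patternless))

print(f"patterned:   {LENGTH} bytes -> {patterned_size} bytes")
print(f"patternless: {LENGTH} bytes -> {patternless_size} bytes")
```

The patterned page shrinks to a small fraction of its length, while the patternless page barely shrinks at all; an observation that breaks the pattern is precisely what such a summary discards.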

Memory also plays a dangerous role in the retelling of stories. Take a joyful childhood memory: each time you retell the memory, you are in fact remembering the last time you told the memory, rather than the memory itself (1). The retelling generates a dangerous opportunity for the memory (story/narrative) to change at every subsequent retelling.

Behavioral naivety. “Humans are creatures of habit” is a common belief across several branches of learning (18). Yet, we also suffer from a serious case of self-delusion, believing we are stoic, conscious beings who are free-willed and set apart. This may be true in comparison to other creatures in existence, but perhaps not so evident when comparing oneself to a colleague, or further still, oneself when tasked with decision-making — i.e., your behavioral nature.

Gambling is a useful mechanism to explain one’s behavioral nature. Some of us are willing to gamble dollars to win a succession of pennies (short odds), while others are willing to gamble a succession of pennies to win dollars (long odds). In other words, your behavioral instincts may encourage you to bet that a black swan will not happen (short odds) or that it will happen (long odds), two strategies that require entirely different behavioral approaches. Financially speaking, many people accept that a strategy with a small chance of success is not necessarily a bad one, as the reward would be large enough to justify it.

Silent evidence. Silent evidence is the human tendency to view history with a lens that filters out evidence differing from our preconceptions, i.e., what extreme events (black swans) use to conceal their own randomness. Evidence that we do not have access to, or our inability to recognize data as evidence, does not mean that the evidence does not exist.

An example of silent evidence at play is a comparison of millionaires against victims of motorcycle accidents. If you were to survey a group of millionaires to understand their key attributes, the following may be revealed: courage, comfort with risk-taking, optimism, etc. However, if you were to survey a group of motorcycle accident victims to understand their key attributes, the following may also be revealed: courage, comfort with risk-taking, optimism, etc. Your brain could easily draw the connection across both groups of people, that their attributes were the cause of their success (wealth) or failure (accident). However, there is undoubtedly additional evidence with both groups to influence their outcomes, perhaps evidence we cannot see or that we simply do not recognize.

In some cases, the difference between two outcomes could quite simply be luck, something that is often overlooked when identifying success and/or failure, as it is difficult to quantify or recognize. The bias of silent evidence ultimately lowers our perception of the risks incurred in the past, particularly for those of us lucky enough to survive the risks we take.

The ludic fallacy. Taleb (1) coined the term the ludic fallacy (ludic coming from the Latin word for games, ludus) to describe the attributes of uncertainty we face in real life that have little connection to the sterilized ones we encounter in games. Returning to gambling, Taleb theorizes that gambling sterilizes and domesticates uncertainty in the world — we are trained to discover and understand the odds at play to support our daily decision-making.

This can be exemplified by the toss of a coin. Assume that a coin is fair — i.e., it has an equal probability of coming up heads or tails when it is flipped. If I have flipped the coin 99 times, and it has been heads each time, what are the odds of me getting tails on the next throw?

- Answer A: 50% due to independence between flips (19).

- Answer B: 1% due to the flawed assumption of equal opportunity.

Humans are too often found thinking inside-the-box, blindly accepting assumptions that are provided to us. The history of the coin flip should encourage us to question the assumption of a fair coin; however, the ludic fallacy often blocks that questioning.

Likewise, the engineer must learn to question underlying assumptions to avoid tunneled (inside-the-box) thinking and to better prepare for black swan events.
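The coin example can be put into numbers by allowing for the possibility that the coin is not fair. The sketch below is one way to do so, using Bayes' rule with two candidate coins; the prior chance that the coin is two-headed (`prior_loaded`) is a hypothetical value chosen for illustration.

```python
def probability_of_tails(prior_loaded: float, heads_seen: int) -> float:
    """P(tails on the next flip) after seeing only heads.

    Two candidate coins are considered: a fair coin and a two-headed coin.
    """
    likelihood_fair = 0.5 ** heads_seen  # chance a fair coin shows this run
    likelihood_loaded = 1.0              # a two-headed coin always shows it
    evidence = (1 - prior_loaded) * likelihood_fair + prior_loaded * likelihood_loaded
    posterior_fair = (1 - prior_loaded) * likelihood_fair / evidence
    return posterior_fair * 0.5          # only the fair coin can land tails


# Answer A: the fair-coin assumption is never questioned
p_unquestioned = probability_of_tails(prior_loaded=0.0, heads_seen=99)

# Answer B: even a one-in-a-million doubt about the coin changes the picture
p_questioned = probability_of_tails(prior_loaded=1e-6, heads_seen=99)

print(f"assumption unquestioned: {p_unquestioned}")
print(f"assumption questioned:   {p_questioned:.2e}")
```

With the assumption held fixed, the answer stays at 50% no matter how long the run of heads; once the assumption is allowed to be wrong, 99 heads in a row push the chance of tails far below 1%.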

Key considerations

Predicting and accounting for black swans is difficult, especially within the financial landscape. However, that does not mean that they should be ignored. Taleb (1) detailed the extensive complications when using the human brain to predict and prepare for black swans, outlining the following warnings:

- do not ignore or invalidate information that conflicts with one’s beliefs (confirmation bias)

- resist the urge to oversimplify through pattern-seeking (narrative fallacy)

- acknowledge behavioral interferences between predictors (behavioral naivety)

- seek to uncover what we do not know or recognize (silent evidence)

- question underlying assumptions to avoid tunneled thinking (ludic fallacy).

Considering how predicting the future based solely on events from the past can be misleading, regularly updating our predictions as new data emerges is a step toward greater clarity. The art and science of prediction is developed in Philip E. Tetlock and Dan Gardner’s book, “Superforecasting” (20). One of the greatest strengths of a forecasting team is its ability to admit errors and change course accordingly.
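Updating a prediction as new data emerges can be sketched with a simple Bayesian frequency estimate. This is one possible approach, not a technique described in the cited book: it assumes events follow a Poisson process with a gamma prior, and the prior belief and the operating history below are hypothetical.

```python
def update_frequency(prior_events, prior_years, new_events, new_years):
    """Gamma-Poisson update: add observed events and years to the prior counts."""
    return prior_events + new_events, prior_years + new_years


def mean_frequency(events, years):
    """Mean estimated frequency in events/yr."""
    return events / years


# Hypothetical prior belief, equivalent to 1 event in 1,000 years
belief = (1.0, 1_000.0)
initial = mean_frequency(*belief)

# Hypothetical new data: 2 events observed in 20 years of operation
belief = update_frequency(*belief, new_events=2, new_years=20)
updated = mean_frequency(*belief)

print(f"initial estimate: {initial:.2e} events/yr")
print(f"updated estimate: {updated:.2e} events/yr")
```

The estimate moves toward the evidence rather than staying anchored to the original belief, which is the habit of admitting error and changing course expressed in arithmetic.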

Application to process safety management

Although direct comparisons between volatile financial markets and hazardous chemical processes are challenging, lessons in psychology can be applied to both to help stakeholders acknowledge and understand the existence of black swans and perhaps to attempt their prediction.

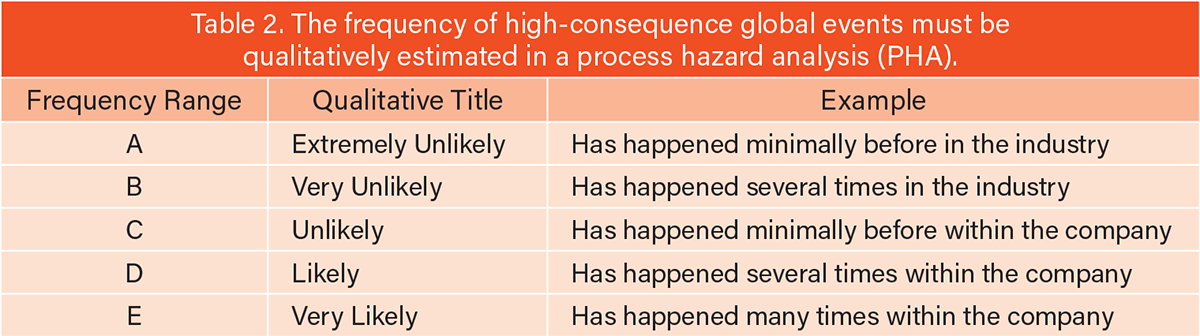

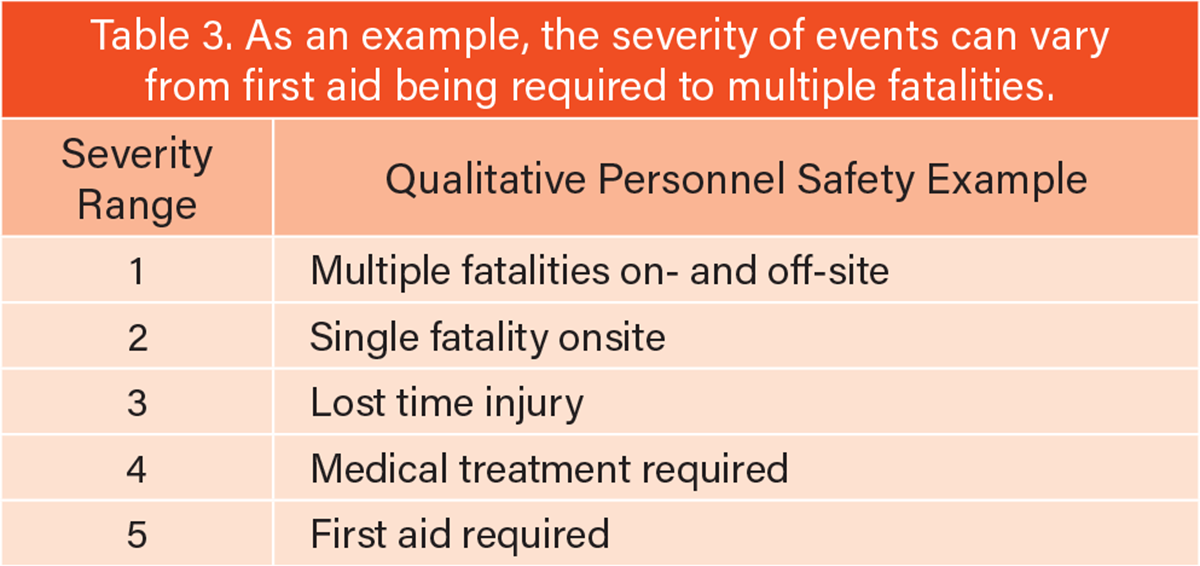

In the chemical process industries (CPI), PHAs are a common tool where teams are asked to qualitatively estimate the frequency and severity of a cause-consequence pairing (e.g., an event). This requires a form of simplification through qualitative interpretation, as shown in Table 2 (frequency) and Table 3 (severity).

Here, we argue for focusing first on the severity of the consequence and only then proceeding to frequency estimation: focus on the harm a scenario could cause rather than the plausibility of it occurring. The central idea of uncertainty (1) suggests that we should focus on what we can know (e.g., the extent of an explosion from a vessel) rather than on predicting the likelihood of such an explosion, which we cannot know with complete confidence.
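A severity-first review order can be sketched as a simple sort. The 1-to-5 scales, the scenario names, and the ratings below are hypothetical and do not reproduce the categories in Tables 2 and 3.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str
    severity: int   # hypothetical scale: 1 (minor) to 5 (catastrophic)
    frequency: int  # hypothetical scale: 1 (remote) to 5 (frequent)


def severity_first(scenarios):
    """Order scenarios by severity, using frequency only to break ties."""
    return sorted(scenarios, key=lambda s: (s.severity, s.frequency), reverse=True)


scenarios = [
    Scenario("Pump seal leak", severity=2, frequency=4),
    Scenario("Vessel explosion", severity=5, frequency=1),
    Scenario("Manual valve left misaligned", severity=3, frequency=2),
]

ranked = severity_first(scenarios)
for s in ranked:
    print(f"{s.name}: severity {s.severity}, frequency {s.frequency}")
```

With these ratings, ranking by the product of severity and frequency would place the pump seal leak first and the vessel explosion last; the severity-first order reverses that, keeping the rare but catastrophic scenario at the top of the discussion.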

Simplification is undoubtedly required in PSM to account for limitations of cost, time, and resources. However, when a team is asked to qualitatively interpret frequency or severity, consider the following cognitive biases and fallacies and how they may impact the PHA:

- Confirmation bias. Appreciate that what has not happened before may still be a plausible outcome. For example, can a manual valve located 20 ft above grade be inadvertently left in the wrong position? Although access is limited, human error or poor work settings could result in valve misalignment.

- The narrative fallacy. Allow scenario development to be complex and intricate. For example, when actively ignoring any safeguards or control systems you have in place (during PHA), allow the mind to develop possible scenarios so that extreme consequences can be considered. Particular focus should be paid to areas of high vulnerability and nuances in design.

- Behavioral naivety. Understand the behavioral nature of your team through personality testing and build PHA teams that complement alternative natures. For example, run simple behavioral tests before workshops to gain an idea of which approach to a simple gambling scenario one might take. Ensure that there is a mix of approaches across the technology experts, operations, maintenance, safety representatives, etc.

- Silent evidence. Look beyond previous incidents and our explanations behind them. For example, fight the temptation to review previous incidents or PHA reports before a workshop, encouraging the team to develop unique scenarios first.

- The ludic fallacy. Encourage novel approaches where certain assumptions of what can and cannot occur are questioned. For example, review your underlying assumptions as a PHA team before the workshop, considering collectively what scenarios you may be restricted in developing. Retaining some assumptions will be required, but the process of questioning the assumptions as a team will encourage a thorough understanding of why the assumptions are there in the first place.

Training PHA teams to observe the human inclination for oversimplification and the biases and heuristics at play could greatly prepare teams for prediction tasks.

In closing

Black swans have occurred and will continue to occur. It is essential to review them within the CPI, your company, and your facility to acknowledge and expose their unpredictability and randomness.

This article does not suggest that we should try to predict black swans precisely. However, by considering at least the possibility of future black swans, we may find that some reveal themselves as “grey swans”: unlikely events that lack randomness. It is much more worthwhile to invest in preparedness than in prediction. Still, where prediction is required in PSM, it can help to be aware of the logical fallacies and cognitive biases of the human brain.

Literature Cited

- Taleb, N. N., “The Black Swan: The Impact of the Highly Improbable,” Second edition, Random House, New York, NY (2010).

- Mann, R., “The 1931 China Flood Is One of the Deadliest Disasters, True Death Toll Unknown,” www.theweathernetwork.com/en/news/weather/severe/this-day-in-weather-history-august-18-1931-yangtze-river-peaks-in-china (Aug. 18, 2021).

- Britannica, “Japan Airlines Flight 123,” www.britannica.com/event/Mount-Osutaka-airline-disaster (accessed Feb. 5, 2024).

- Worldometers, “COVID-19 Coronavirus Pandemic,” www.worldometers.info/coronavirus (accessed Feb. 5, 2024).

- Center for Chemical Process Safety, “CCPS Process Safety Glossary,” www.aiche.org/ccps/resources/glossary (accessed Feb. 5, 2024).

- American Psychological Association, “Heuristics,” Particularly Exciting Experiments in Psychology, www.apa.org/pubs/highlights/peeps/issue-105 (Nov. 9, 2021).

- American Psychological Association, “Bias,” https://dictionary.apa.org/bias (accessed Feb. 5, 2024).

- Kahneman, D., “Thinking, Fast and Slow,” Farrar, Straus and Giroux, New York, NY (2011).

- Cleaver, R. P., et al., “Modelling High Consequence, Low Probability Scenarios,” IChemE Symposium Series, No. 149, www.icheme.org/media/10066/xvii-paper-51.pdf (2003).

- LeBoeuf, R. A., and M. I. Norton, “Consequence-Cause Matching: Looking to the Consequences of Events to Infer their Causes,” Journal of Consumer Research, 39 (1), pp. 128–141 (2012).

- Broughton, E., “The Bhopal Disaster and Its Aftermath: A Review,” Environmental Health, 4, #6 (2005).

- Ibrion, M., et al., “Learning from Non-Failure of Onagawa Nuclear Power Station: An Accident Investigation Over Its Life Cycle,” Results in Engineering, 8, 100185 (2020).

- Pryor, P. C. M., “OHS Body of Knowledge – Models of Causation: Safety,” Australian Institute of Safety and Health, Kensington, Australia (2012).

- Stanovich, K. E., and R. F. West, “Individual Differences in Reasoning: Implications for the Rationality Debate?” Behavioral and Brain Sciences, 23 (5), pp. 645–665 (2000).

- Consumer Reports, “How Many Calories Are in Thanksgiving Dinner?” www.consumerreports.org/diet-nutrition/calories-in-your-thanksgiving-dinner (Nov. 17, 2018).

- Wason, P. C., “On the Failure to Eliminate Hypotheses in a Conceptual Task,” Quarterly Journal of Experimental Psychology, 12 (3), pp. 129–140 (1960).

- Shen, A., et al., “Kolmogorov Complexity and Algorithmic Randomness,” American Mathematical Society, Providence, RI (2007).

- Buchanan, M., “Why We Are All Creatures of Habit,” https://www.newscientist.com/article/mg19526111-700-why-we-are-all-creatures-of-habit (July 4, 2007).

- Kenton, W., “Gambler’s Fallacy: Overview and Examples,” www.investopedia.com/terms/g/gamblersfallacy.asp (Sept. 21, 2023).

- Tetlock, P. E., and D. Gardner, “Superforecasting: The Art and Science of Prediction,” Crown Publishing Group, New York, NY (2016).
