Analyze past decisions that led to incidents to avoid similar disasters and ensure positive outcomes of future critical decisions.

Everyone is a decision-maker. We all routinely make decisions as we go about our daily lives, and the outcomes of those decisions, whether positive or negative, can affect others. In most cases, decisions go unnoticed because their effects are local or personal. In some cases, however, individual or group decisions can have a significant impact not only on the decision-makers, but also on the lives of many others. In the chemical process industries (CPI), this is particularly true when such decisions contribute directly or indirectly to disastrous outcomes, including loss of life, asset damage, environmental releases, or financial losses.

The elite sports world offers some insight into decision-making. High-performing teams integrate decision-making as a key component of training. They practice game-like situations to master the ability to make good decisions with positive outcomes. For example, athletes are taught through the paradigm of risk and reward. Players are not only taught how to assess risk in play, but also to read and react with situational awareness. Winning strategies incorporate rule sets that enhance the likelihood of positive outcomes from the many fast-paced decisions required during the course of a game.

When dealing with critical decisions, engineers can learn from the sports world. Whenever a decision can have a significant impact, the decision-making process becomes critical to minimize the potential for a poor decision to cause a disastrous outcome. This process must be risk-based and follow the principles of risk-based decision management (RBDM). This article provides the rationale and basis for such an approach.

Examples of critical decisions

Critical decisions can either prevent or contribute to disastrous outcomes. In many chemical incidents, unfortunately, critical decisions have been a contributing factor.

Macondo well blowout and explosion. The Deepwater Horizon drilling rig explosion and subsequent fire occurred on April 20, 2010 (1). The rig was drilling in the Macondo Prospect oil field about 40 mi (64 km) off the southeast coast of Louisiana. The explosion and subsequent fire sank the Deepwater Horizon rig, killing 11 workers and injuring 17 others (Figure 1) (1). The blowout also caused an oil-well fire and a massive offshore oil spill of more than 4 million bbl in the Gulf of Mexico. The incident has been described as the largest accidental marine oil spill in the world and the largest environmental disaster in U.S. history.

▲Figure 1. An engineer made a disastrous decision to not test the integrity of a deep offshore well, which contributed to the largest environmental disaster in the U.S. — the Macondo well blowout incident. Source: (1).

Prior to the explosion, an engineer decided not to test the integrity of a very deep offshore well and canceled the integrity test (a cement bond log test), going against the approved work plan and established industry practices. As a result, methane gas that had been leaking into the well moved up the pipe to the rig, where it ignited.

Richmond refinery fire. On Aug. 6, 2012, a pipe in a crude unit atmospheric column catastrophically ruptured at the Chevron refinery in Richmond, CA (2,3). The pipe rupture occurred on a 52-in.-long section of an 8-in.-dia. line. At the time of the incident, light gas oil was flowing through the line at a rate of approximately 10,800 bbl/day. Six employees suffered minor injuries during the incident and subsequent emergency response efforts. In the weeks after the incident, nearly 15,000 people from the surrounding communities sought treatment at nearby medical facilities for various respiratory ailments, and approximately 20 of those people were admitted for treatment.

Prior to this incident, a plant manager ignored expert advice to replace piping on a product takeoff line in a crude distillation unit that had been severely corroded by a well-known mechanism. The same plant manager continued operations when leaks from the corroded piping were noticed, exposing emergency crews to unnecessary risk.

Texas City refinery explosion. On March 23, 2005, a hydrocarbon vapor cloud formed in an isomerization process unit that was starting up after a shutdown at BP’s refinery in Texas City, TX (4–6). The vapor cloud ignited and violently exploded, killing 15 workers, injuring 180 others, and severely damaging the refinery (Figure 2) (4).

▲Figure 2. A risk-assessment team violated company risk-management procedures and siting criteria when they allowed temporary office trailers to be placed in close proximity to hydrocarbon processing units that were temporarily offline. During startup of the units, an incident occurred that killed 15 workers in the nearby office trailers. Source: (4).

Long before the explosion occurred, a risk-assessment team approved the siting of temporary office facilities in close proximity to hydrocarbon processing units that were temporarily offline, violating the company’s risk procedures and facility siting criteria. All of the fatalities in this incident occurred in or near the temporary office facilities.

Longford gas plant explosion. On Sept. 25, 1998, the heated lean oil system in a heat exchanger failed at a gas plant (7). Without the flow of heated oil, the heat exchanger cooled until it suffered cold-temperature embrittlement. When heated oil was reintroduced into the heat exchanger, a brittle fracture released a large cloud of hydrocarbon vapors. The vapor cloud ignited (without causing an explosion) and set off a fierce jet fire that lasted for two days.

In this incident, the company’s engineering department — which was responsible for maintaining corporate safety standards and good engineering practices — failed to conduct a hazard and operability (HAZOP) analysis of the changes made to the gas plant.

Bhopal chemical plant release. A major gas leak occurred at the Union Carbide India Ltd. pesticide plant in Bhopal, India, on the night of Dec. 2–3, 1984 (8). Highly toxic methyl isocyanate gas spread to nearby small towns and affected over half a million people. The official immediate death toll was 2,259, but experts estimate the total number of deaths to be much higher. Union Carbide Corp., a major international chemical company, compromised its corporate safety standards and good engineering practices when operating the Bhopal chemical plant.

Although poor decisions with disastrous outcomes gain prominence because of their visibility, it is important to recognize that critical decisions can also prevent disastrous outcomes. Decisions with positive outcomes go largely unnoticed and are unlikely to make headlines. Reports from company personnel who have made critical decisions with positive outcomes, or who have experienced the benefits of other employees’ good choices, provide good examples. In many cases, these stories are anecdotal, but they are important to recognize nonetheless.

Terra Nova offshore oil platform. The Terra Nova floating production storage and offloading (FPSO) vessel is located in the Terra Nova oil and gas field, approximately 220 mi (350 km) off the coast of Newfoundland, Canada, in the North Atlantic Ocean (Figure 3). It produces oil from subsea wells that are connected to the vessel with flexible flowlines or risers.

▲Figure 3. A good decision was made to delay the startup of the Terra Nova floating production storage and offloading (FPSO) vessel when a pressure balance safety joint unexpectedly failed, avoiding a disastrous oil spill. Image courtesy of Terra Nova Alliance.

A senior vice president of operations delayed startup of the multibillion-dollar offshore oil production facility when a pressure balance safety joint failed on a flow line. His decision to delay oil production prevented the company from receiving a significant tax credit, but ignoring the failure and proceeding with startup could have caused a disastrous oil spill.

Dolphin Energy gas project. The Dolphin Energy gas project began operations in 2007 to recover gas reserves off the coast of Qatar. The recovered gas is transported to an onshore gas processing plant. After processing, the gas is transported through a 48-in. subsea pipeline to the United Arab Emirates and then to Oman. The Dolphin Energy project is one of the largest energy-related ventures operating in the region.

A vice president of operations stopped production — risking significant penalties for not achieving annual production targets — when an overhead line from the condensate stabilization unit developed a leak (Figure 4), threatening workers with exposure to high levels of hydrogen sulfide. Operations resumed at reduced capacity and pressure only after a risk assessment was conducted and interim mitigation measures were identified and implemented.

▲Figure 4. A potentially disastrous incident was avoided at one of the largest energy-related ventures in the Middle East — the Dolphin Energy gas project. Management made a critical decision to stop production when a minor leak developed in an overhead line. Image courtesy of Dolphin Energy.

Learning from disastrous decisions

Although extremely important, RBDM or critical-decision analyses are currently not taught in engineering curricula. In the workplace, engineers and other technical staff are promoted based on their abilities and the assumption that their experience will guide them to make robust decisions when needed, particularly when time is of the essence. However, the large number of incidents that have occurred at CPI facilities calls this assumption into question.

Traditionally, companies in the CPI have not learned from past incidents, as evidenced by the number of similar incidents that have recurred. Many disasters have occurred and recurred because of questionable or even poor decisions made at a critical time. Multiple factors come into play when key decisions are being made, and these further complicate the issues at hand. Some of these factors include:

- time and/or cost pressures

- imprecise, insufficient, or conflicting information

- cultural biases

- the individual’s inability or lack of self-efficacy when placed in critical situations

- the experience of the decision-makers.

Analyzing incidents using the tenets of RBDM reveals the significant contributing factors. This provides a better understanding of what went wrong and increases the chances of learning from past incidents.

To determine the most significant factors that have historically played a role in safety incidents, we evaluated eleven past disasters (1–14):

- Flixborough plant explosion (June 1, 1974)

- Bhopal chemical plant release (Dec. 3, 1984)

- Piper Alpha oil platform explosion (July 6, 1988)

- Pasadena polyethylene plant explosion (Oct. 23, 1989)

- Longford gas plant explosion (Sept. 25, 1998)

- Petrobras 36 (P-36) oil platform explosion (March 20, 2001)

- Texas City refinery explosion (March 23, 2005)

- Point Comfort propylene explosion (Oct. 6, 2005)

- Macondo well blowout and explosion (April 20, 2010)

- Richmond refinery fire (Aug. 6, 2012)

- Geismar olefins plant explosion and fire (June 13, 2013).

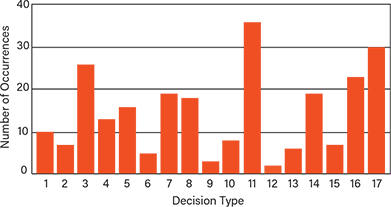

For each incident, we created a decision analysis matrix to investigate the decisions that indirectly or directly caused the incident. (In the next section, we explore how to create such matrices.) Interestingly, analyzing these past safety incidents revealed several common themes (Table 1). Some themes occurred more frequently than others (Figure 5).

Table 1. Identifying the common themes of chemical incidents helps to inform better decisions and prevent similar disastrous outcomes.

| Decision Type | Decision Theme |
| --- | --- |
| 1 | Significantly changing the work execution plans without approvals |
| 2 | Making impactful decisions that are well beyond one’s responsibilities |
| 3 | Not conducting revalidation risk assessments (i.e., failure to capture changing circumstances and situational creep) |
| 4 | Ignoring the advice of subject matter experts |
| 5 | Not following established management of change (MOC) processes |
| 6 | Keeping safety-critical equipment offline longer than necessary |
| 7 | Practicing poor control of work and handover management |
| 8 | Failing to recognize and respond effectively to emergency situations, including not having emergency response (ER) plans and not conducting ER exercises |
| 9 | Poorly managing incentive programs |
| 10 | Not maintaining robust communication protocols |
| 11 | Not following established engineering standards and norms |
| 12 | Not ensuring that all third-party-supplied safety-critical equipment is performance tested and verified prior to use |
| 13 | Failing to inform senior management (i.e., preventing bad news from reaching upper management) |
| 14 | Cost-cutting without assessment of impacts on operations and staff |
| 15 | Failing to learn from recent similar incidents |
| 16 | Being pressured to maintain production at the expense of safety |
| 17 | Lacking adequate technical operational expertise and/or lacking sufficient training |

▲Figure 5. Learning from reoccurring disastrous decisions is a practical approach to improving critical decisions. The most common types of decisions in our incident analyses were not following established engineering standards and norms, lacking adequate technical operational expertise and/or lacking sufficient training, and not conducting revalidation risk assessments.

Identifying and avoiding these common types of decisions can reduce the possibility of negative outcomes and help decision-makers make more rational decisions during engineering projects or operations. Analyzing each type of decision and developing a rationale as to why it must be avoided can reduce the probability of making similar disastrous decisions.

Creating a decision analysis matrix

A decision analysis matrix reviews the key decisions that contributed directly or indirectly to the disastrous outcome. The decision analysis focuses on the decisions that were made leading up to the incident. The matrix should include a column for the immediate impact of the decision, the factors that contributed over a period of time, and an alternative, better decision that could have been made. The last column of each decision matrix characterizes the type(s) of decision according to Table 1.

Tables 2 and 3 are decision analysis matrices for the Macondo well blowout and the Longford gas plant explosion, respectively. The decisions outlined in Table 2 involved significantly changing the work execution plans without approvals (Type 1) and practicing poor control of work and handover management (Type 7). The Longford explosion was the result of many types of decisions, including ignoring the advice of subject matter experts (Type 4), not following established management of change (MOC) processes (Type 5), not following established engineering standards and norms (Type 11), cost-cutting without assessment of impacts on operations and staff (Type 14), failing to learn from recent similar incidents (Type 15), and lacking adequate technical operational expertise and/or lacking sufficient training (Type 17).
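A decision analysis matrix of this kind maps naturally onto a small record type. The following is a minimal Python sketch rather than a prescribed tool: the class name `DecisionRecord`, its field names, and the helper `decision_types_in` are hypothetical, chosen to mirror the column headings of Tables 2 and 3, and the two sample rows are abbreviated from the Macondo matrix.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class DecisionRecord:
    """One row of a decision analysis matrix (hypothetical field names)."""
    decision: str                    # the disastrous decision
    significance: str                # e.g., "Critical", "Significant", "Important"
    rationale: str                   # why the decision was made
    contributing_factors: str        # factors that contributed over time
    immediate_impact: str
    improved_decision: str           # the better alternative
    decision_types: Tuple[int, ...]  # theme numbers from Table 1 (1 to 17)

    def __post_init__(self) -> None:
        # Reject type codes that do not appear in Table 1.
        invalid = [t for t in self.decision_types if not 1 <= t <= 17]
        if invalid:
            raise ValueError(f"unknown decision type(s): {invalid}")


def decision_types_in(matrix: Iterable[DecisionRecord]) -> List[int]:
    """Return the sorted, de-duplicated decision types found in a matrix."""
    return sorted({t for row in matrix for t in row.decision_types})


# Two rows abbreviated from the Macondo matrix (Table 2).
macondo = [
    DecisionRecord(
        decision="Dismissing the recommendation to use 21 centralizers",
        significance="Significant",
        rationale="Avoid delays and extra costs",
        contributing_factors="Cement slurry failed to rise uniformly",
        immediate_impact="None",
        improved_decision="Install the recommended number of centralizers",
        decision_types=(1,),
    ),
    DecisionRecord(
        decision="Rewarding production and cost reduction over safety",
        significance="Significant",
        rationale="Increase production",
        contributing_factors="Risk taking was incentivized over safety",
        immediate_impact="None",
        improved_decision="Provide balanced incentives for safety and cost",
        decision_types=(7,),
    ),
]

print(decision_types_in(macondo))  # [1, 7]
```

Storing the Table 1 type codes as a tuple lets one decision carry several themes, as several rows of the Longford matrix do.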

Decision classification. Decisions that were made in the course of each incident are categorized according to their relative contribution or influence on the incident. In order of decreasing influence, the decisions are classified as critical, preventive, mitigative, or contributing.

Critical decisions are decisions that directly contribute to an incident. Had the critical decision not been made, the incident could have been prevented entirely. For example, the decision not to conduct a cement bond log (CBL) test after performing cement work was a critical decision in the Macondo incident (Table 2). A CBL would have revealed that the well cement was not bonded and that the integrity of the well could not be assured.

Table 2. An incident decision matrix is a useful tool for analyzing the key decisions that directly or indirectly caused an incident. This incident decision matrix summarizes the decisions that contributed to the Macondo well blowout.

| Disastrous Decision | Decision Significance | Rationale | Factors that Contributed Over Time | Immediate Impact | Improved Decision | Decision Type |
| --- | --- | --- | --- | --- | --- | --- |
| Dismissing Halliburton’s recommendation to use 21 centralizers to ensure that the production casing ran down the center of the well bore to minimize channel formation | Significant | Avoid delays and extra costs | The cement slurry failed to rise uniformly between the casing and the borehole wall, creating channels (or spaces) that can contribute to a well blowout | None; the impact of channeling would only come into play during a well incident | Install the number of centralizers that was recommended by Halliburton and confirmed by models | 1 |
| Choosing a single-well design over a tie-back design, which had more barriers to prevent gas from flowing up to the outside of the well | Significant | Reduce costs | High-pressure gas that entered the bottom of the well was able to escape to the outside of the well more easily | None; the impact of channeling would only come into play during a well incident | Invest in the robust tie-back design that provides extra layers of protection | 1 |
| Rewarding production and cost reduction while not incentivizing safety, creating pressure to work fast and take risks | Significant | Increase production | Risk taking was incentivized over safety | None; the company culture of cutting corners persisted and eventually had a direct impact on the disaster | Provide balanced incentives for both safety and cost reduction goals; conduct risk assessments to ensure that cost reduction incentives do not adversely affect safety | 7 |
| Initiating the cement process without reviewing the cement design or waiting for test results | Critical | Avoid delays | Important steps were ignored to stay on schedule | The unstable cement was a significant factor in the well blowout and caused the disaster | Follow the established plan and wait for test results before beginning critical work | 1 |

Preventive decisions involve the integrity of the prevention system and either compromise existing barriers or fail to implement appropriate barriers. Preventive decisions increase the likelihood of the incident occurring, but they do not necessarily cause the significant consequences on their own. For example, a single-well design was chosen for the Macondo well because it was cheaper, but it provided only two barriers to prevent gas from flowing up the outside of the well production tubing (Table 2). A more robust decision would have been to use a liner tie-back design, which provides additional barriers or layers of protection against gas entering the casing and causing a well blowout.

Mitigative decisions are those that have the potential to worsen the consequences of the incident. For example, in the Texas City incident, the decision to proceed with startup knowing that the level indication instrumentation in the vessels was not functional compromised the integrity of the startup, since the measurements that would have been able to mitigate the effects of high level and vessel overflow (i.e., stop fluid pumping) could not be relied upon. Had the instrumentation been reliable, the operator would have abandoned the startup.

Contributing decisions are important decisions that may have had an indirect effect on the incident. For example, the decision to not conduct a HAZOP study of the changes made to the gas plant at Longford was a contributing decision (Table 3). A HAZOP analysis would have identified the possibility of heat exchanger embrittlement in a flooded tower scenario and revealed the need for additional protection measures.

Table 3. This incident decision matrix breaks down the decisions that contributed to the Longford gas explosion.

| Disastrous Decision | Decision Significance | Rationale | Factors that Contributed Over Time | Immediate Impact | Improved Decision | Decision Type |
| --- | --- | --- | --- | --- | --- | --- |
| Ignoring engineering standards and practices and failing to conduct HAZOP studies at the gas plant facility | Important | Disregard for the importance of risk assessments | Hazards related to maintaining the temperature profile in the de-ethanizer column were not understood | None; it was not until a major upset occurred that a lack of understanding of the tower cooling effects was revealed, directly causing the disaster | Follow engineering standards and practices, which includes conducting a HAZOP study to identify hazards and operational concerns | 5, 11 |
| Relocating all engineering staff from the site to Melbourne, which was several hundred miles away | Important | Reduce costs | Engineers lost day-to-day familiarization with the operation over time, and onsite engineering support and expertise were unavailable | None; it was not until a major upset occurred that a lack of understanding of the tower cooling effects was revealed, directly causing the disaster | Assign a core staff of process engineers on-site to support operations and to conduct risk assessments | 14 |
| Poorly training personnel and failing to address hazardous scenarios | Important | Reduce costs | Operators were unaware of the critical need to prevent the rich oil system from reaching low temperatures during an upset | None; it was not until a major upset occurred that a lack of understanding of the tower cooling effects was revealed, directly causing the disaster | Provide incident-based training for all operators | 4, 14, 17 |
| Not identifying significant issues, such as a lack of risk assessments, with an effective audit program | Important | Change in audit program | Plant personnel were under the impression that procedures and safety were satisfactory | None; it was not until a major upset occurred that the lack of feedback from audits was revealed | Assign experienced technical members to the audit team with the task of identifying areas of improvement | 15 |
| Not conducting detailed investigations of incidents that occurred | Important | Reduce costs | Opportunities were not available to learn from process upsets and standard operating procedures (SOPs) were not updated | None; it was not until a major upset occurred that a lack of understanding of the tower cooling effects was revealed, directly causing the disaster | Conduct thorough investigations of all incidents at Longford and other similar operations; update SOPs as warranted | 15, 17 |

Different scalar weighting factors apply to each decision category. These weighting factors are the logarithm of safety instrumented system (SIS) risk-reduction factors: 10⁻⁴ for critical decisions, 10⁻² for preventive and mitigative decisions, and 10⁻¹ for contributing decisions. The factors indicate the probability of the event occurring.
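The category weights can be kept in a simple lookup so that each decision in a matrix carries its weighting factor. This is a hedged sketch only: it assumes the factors read as 10⁻⁴, 10⁻², and 10⁻¹, and the names `CATEGORY_WEIGHT`, `weight_exponent`, and `rank_by_category` are illustrative rather than taken from the article. The text does not say how the weights are combined into an overall score, so the sketch only looks them up and orders decisions by category.

```python
import math

# Weighting factor per decision category (assumed reading of the text).
CATEGORY_WEIGHT = {
    "critical": 1e-4,
    "preventive": 1e-2,
    "mitigative": 1e-2,
    "contributing": 1e-1,
}


def weight_exponent(category: str) -> int:
    """Return the base-10 exponent of a category's weighting factor."""
    return round(math.log10(CATEGORY_WEIGHT[category.lower()]))


def rank_by_category(decisions):
    """Order (label, category) pairs from most to least influential.

    The article lists the categories in order of decreasing influence as
    critical, preventive, mitigative, contributing, so a smaller weighting
    factor sorts first. Python's sort is stable, so decisions with equal
    weights keep their input order.
    """
    return sorted(decisions, key=lambda d: CATEGORY_WEIGHT[d[1].lower()])


# Category assignments below follow the examples given in the text.
decisions = [
    ("No HAZOP study of plant changes (Longford)", "contributing"),
    ("Single-well design instead of tie-back (Macondo)", "preventive"),
    ("Cement bond log test canceled (Macondo)", "critical"),
]

for label, category in rank_by_category(decisions):
    print(f"{label}: 10^{weight_exponent(category)}")
```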

Decision types. The incident analyses indicated that 17 different types of decisions (Table 1) are common in chemical disasters. Avoiding and learning from these — especially the most frequently occurring ones (Figure 5) — can significantly improve the outcomes of critical decisions.

The most important types of decisions to learn from and avoid are:

- not following established engineering standards and norms (Type 11)

- lacking adequate technical operational expertise and/or lacking sufficient training (Type 17)

- not conducting revalidation risk assessments (i.e., failure to capture changing circumstances and situational creep) (Type 3).

These would likely be the most frequent types of critical decisions regardless of the number of disastrous incidents included in the analysis.
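A frequency ranking of this kind amounts to tallying the Decision Type column across the incident matrices. The sketch below is illustrative only: it uses just the type codes shown in Tables 2 and 3 (two of the eleven incidents), so its counts will not match Figure 5, and the names `MATRIX_TYPES` and `tally_decision_types` are hypothetical.

```python
from collections import Counter

# Decision Type codes, one tuple per matrix row, copied from Tables 2 and 3.
MATRIX_TYPES = {
    "Macondo": [(1,), (1,), (7,), (1,)],
    "Longford": [(5, 11), (14,), (4, 14, 17), (15,), (15, 17)],
}


def tally_decision_types(matrices):
    """Count how often each Table 1 decision type appears across matrices."""
    counts = Counter()
    for rows in matrices.values():
        for types in rows:
            counts.update(types)
    return counts


counts = tally_decision_types(MATRIX_TYPES)

# Most frequent first; ties broken by type number for a stable listing.
ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
for decision_type, n in ranked:
    print(f"Type {decision_type}: {n}")
```

With only these two matrices, Type 1 appears three times and Types 14, 15, and 17 twice each; extending `MATRIX_TYPES` with the other nine incident matrices would be needed before comparing against Figure 5.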

Incorporating decision types into risk assessments

The risk-assessment process is one of the most important tools available for providing input to decisions. A rigorous risk assessment that follows established protocols and procedures allows engineers and management to compare the potential impacts of a range of possible decisions. As such, the risk assessment is a critical input to the decision-making process and should be performed for all CPI processes and facilities.

To aid in this effort, consider decision types when starting a risk assessment. A rigorous risk assessment helps the decision-maker avoid moving into the zone of uncertainty or making assumptions that are not warranted by the circumstances. Just as top athletes need coaching to improve performance, engineers need coaching in risk-based decision management and in the overall risk-assessment process.

In closing

Analyzing chemical incidents reveals common trends in decision-making that have contributed to disastrous outcomes. These trends can be used to assist in risk assessments to improve the overall decision-making process. The types of critical decisions discussed here should be reviewed by key facility safety personnel so they can recognize and avoid them, which will significantly reduce the probability of a disastrous outcome in the future.

Acknowledgments

The author thanks the graduate students from the Mary Kay O'Connor Process Safety Center (MKOPSC) at Texas A&M Univ. who contributed to this work: Sundar Janardanan, Edna Mendez, Prerna Jain, Jiayong Zhu, Shuai Yuan, Mengxi Yu, Hallie Graham, Yueqi Shen, Harold Escobar, Pranav Kannan, Pratik Krishnan, Jingyao Wang, Denis Su, Lubna Ahmed, Chris Kwadwo Gordon, Sankhadeep Sarkar, Lin Zhao, and Nilesh Ade.

Literature Cited

1. U.S. Chemical Safety and Hazard Investigation Board, “Macondo Blowout and Explosion,” Report No. 2010-10-I-OS, CSB, Washington, DC (2016).

2. U.S. Occupational Safety and Health Administration, “Phillips 66 Company Houston Chemical Complex Explosion and Fire,” U.S. Dept. of Labor, Washington, DC (Apr. 1990).

3. Yates, J., “Phillips Petroleum Chemical Plant Explosion and Fire,” U.S. Fire Administration, Pasadena, TX (1989).

4. U.S. Chemical Safety and Hazard Investigation Board, “BP America Refinery Explosion,” Report No. 2005-04-I-TX, CSB, Washington, DC (2007).

5. Holmstrom, D., et al., “CSB Investigation of the Explosions and Fire at the BP Texas City Refinery on March 23, 2005,” Process Safety Progress, 25 (4), pp. 345–349 (2006).

6. Hopkins, A., “Failure to Learn: The BP Texas City Refinery Disaster,” CCH Australia, Sydney, Australia (2008).

7. Hopkins, A., “Lessons from Longford: The Esso Gas Plant Explosion,” CCH Australia, Sydney, Australia (2000).

8. Broughton, E., “The Bhopal Disaster and its Aftermath: A Review,” Environmental Health, 4 (1), doi: 10.1186/1476-069x-4-6 (2005).

9. Sanders, R. E., “Management of Change in Chemical Plants: Learning from Case Histories,” Butterworth-Heinemann, Oxford, U.K. (1993).

10. Barusco, P., “The Accident of P-36 FPS,” presented at the 2002 Offshore Technology Conference, Houston, TX (2002).

11. National Aeronautics and Space Administration, “That Sinking Feeling,” Systems Failure Case Studies, 2 (8), NASA, Washington, DC (Oct. 2008).

12. U.S. Environmental Protection Agency, “Giant Oil Rig Sinks,” Journal of the U.S. EPA Oil Program Center, 5 (2001).

13. Cullen, W. D., “The Public Inquiry into the Piper Alpha Disaster,” Drilling Contractor, 49 (4) (July 1993).

14. Drysdale, D., and R. Sylvester-Evans, “The Explosion and Fire on the Piper Alpha Platform, 6 July 1988. A Case Study,” Philosophical Transactions of the Royal Society of London. Series A: Mathematical, Physical, and Engineering Sciences, 356 (1748), pp. 2929–2951 (1998).
